I am happy to announce that the German Research Foundation (DFG) selected my project proposal “Towards a neural network system architecture for multimodal explanations” for funding. (DFG FKZ DY 151/2-1, Project No. 454834942)

The project will start in early summer this year and will run for three years. I am now looking for a new team member for the open researcher position. If you are interested, please contact me via email (martin.dyrba (at) dzne.de) and include your CV. A Master’s degree in one of the STEM fields is required. The candidate is expected to complete a PhD during the three-year project runtime. Additionally, I am looking for student assistants to support the project.

The primary objective of this project is to investigate a system architecture and methodologies for deriving explanations from CNN models to reach a self-explanatory, human-comprehensible neural network. We will address the use case of detecting dementia and mild cognitive impairment due to Alzheimer’s disease and frontotemporal lobar degeneration in magnetic resonance imaging (MRI) data.

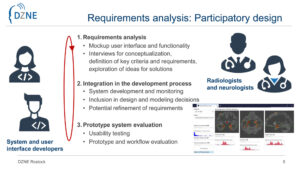

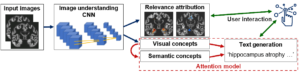

As a first step, using a participatory design approach, we will examine which diagnostic criteria neuroradiologists apply and what they expect of diagnostic assistance software with respect to comprehensibility and explainability.

In a second step, building on our existing CNN model, which accurately detects Alzheimer’s disease (AD) in T1-weighted brain MRI scans, we will develop a CNN system architecture capable of learning visual and semantic concepts that encode image features of high diagnostic relevance. A major challenge here is developing a methodology for providing visual and textual explanations, including defining the individual system components and their interactions. We aim to create an attention model that hierarchically represents the detected concepts and their diagnostic relevance, and that supports the ranking, selection, and combination of potential explanations.

In a third step, we will develop a visual interface for providing visualizations, explanations, and user interaction methods. Interactive CNN relevance maps will show the brain areas that contribute most to the diagnostic decision for a single person’s MRI scan. We will create a textual summary that describes the CNN decision process and the detected image concepts of diagnostic relevance. Users will be able to interactively obtain more detailed information on specific image areas or individual explanation sentences.

Finally, neuroradiologists will evaluate the prototype CNN model with respect to its effect on diagnostic accuracy and the radiological examination process. This will tell us how useful the system is and whether it actually improves the neuroradiologists’ diagnostic confidence and reduces examination time.
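To give a rough idea of what a relevance map is, here is a minimal sketch using occlusion sensitivity, one generic attribution technique. It is only an illustration: the project description does not state which attribution method will be used, and `toy_score`, `occlusion_relevance`, the 8×8 "image", and the weights are all hypothetical stand-ins for a trained CNN and a real MRI scan.

```python
import math
import random


def toy_score(image, weights):
    """Hypothetical stand-in for a trained CNN: logistic score of a weighted pixel sum."""
    total = sum(
        p * w
        for row_p, row_w in zip(image, weights)
        for p, w in zip(row_p, row_w)
    )
    return 1.0 / (1.0 + math.exp(-total))


def occlusion_relevance(image, score_fn, patch=2, baseline=0.0):
    """Relevance of a patch = drop in the model score when that patch is masked out."""
    reference = score_fn(image)
    rows, cols = len(image), len(image[0])
    relevance = [[0.0] * cols for _ in range(rows)]
    for r in range(0, rows, patch):
        for c in range(0, cols, patch):
            cells = [
                (i, j)
                for i in range(r, min(r + patch, rows))
                for j in range(c, min(c + patch, cols))
            ]
            occluded = [row[:] for row in image]  # copy, so the input stays untouched
            for i, j in cells:
                occluded[i][j] = baseline
            drop = reference - score_fn(occluded)
            for i, j in cells:
                relevance[i][j] = drop
    return relevance


# Assumed toy data: random positive intensities, and a "model" that only
# looks at one 2x2 region (rows 2-3, columns 4-5).
rng = random.Random(0)
image = [[rng.uniform(0.1, 1.0) for _ in range(8)] for _ in range(8)]
weights = [[0.0] * 8 for _ in range(8)]
for i in (2, 3):
    for j in (4, 5):
        weights[i][j] = 1.0

relevance = occlusion_relevance(image, lambda img: toy_score(img, weights))
relevant_cells = {
    (i, j) for i, row in enumerate(relevance) for j, v in enumerate(row) if v != 0.0
}
print(sorted(relevant_cells))
```

In this toy setting only the region the model actually uses receives non-zero relevance. For a real 3D MRI volume and a deep network, gradient-based or relevance-propagation methods are common alternatives, since occluding every patch requires one forward pass per patch.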

With this project, we will develop and provide a methodology for easy-to-use and self-explaining CNN models. Additionally, we will provide a framework for generating clinically useful explanations.